AI Risk Management: What It Is and Why Businesses Need It

From fraud detection to payment approvals, artificial intelligence is powering critical parts of financial infrastructure. But here’s the problem: most organizations are adopting AI faster than they’re securing it.

If your business touches real-time payments, sensitive customer data, or compliance-heavy operations, managing AI risk isn’t just a checkbox—it’s survival.

In this post, we’ll break down what AI risk management really means, how it differs from AI governance, and why frameworks like the NIST AI RMF, the EU AI Act, and the ISO/IEC standards should already be part of your roadmap. Whether you’re leading product, compliance, or payments, this is the guide you need to future-proof your AI systems.

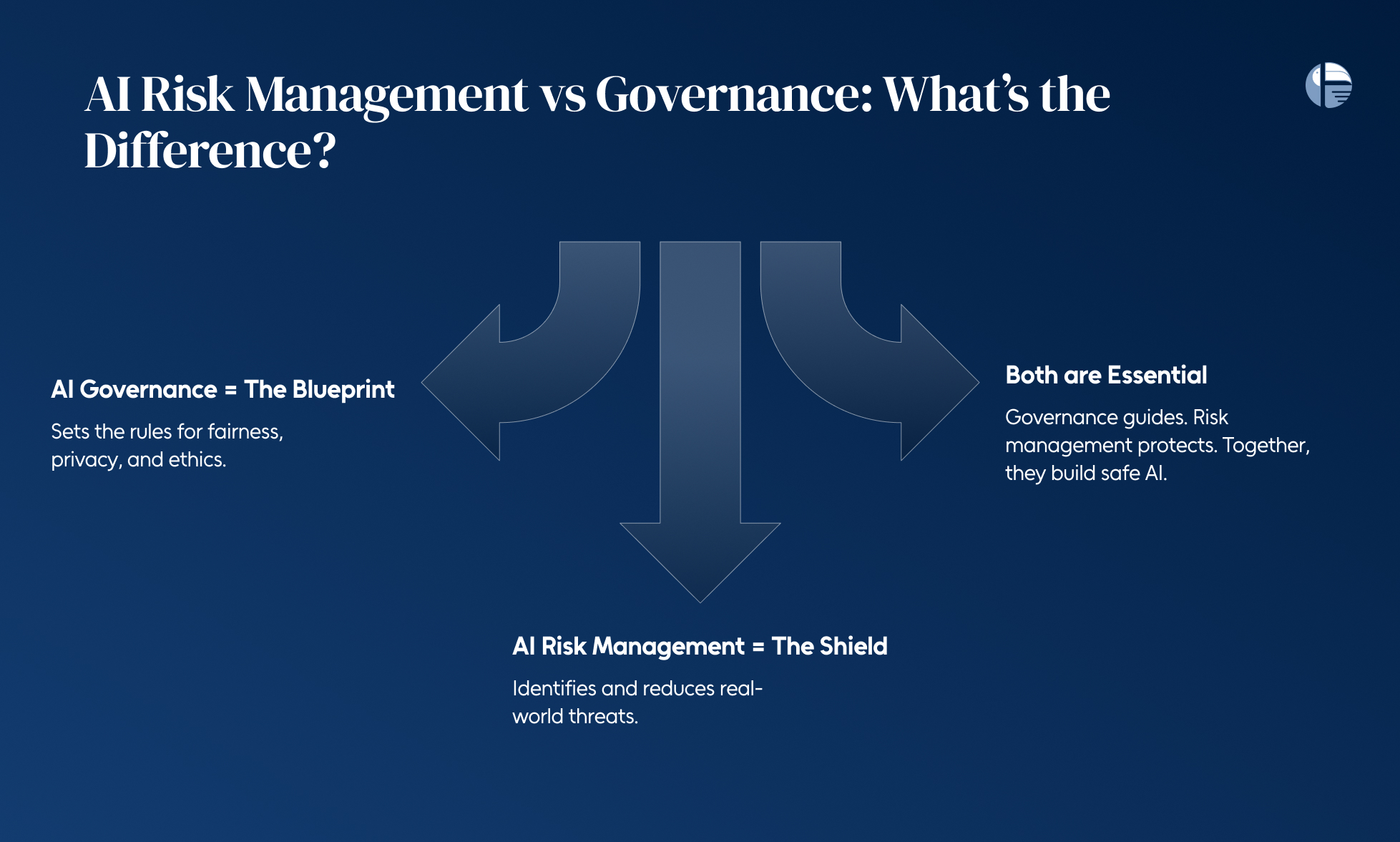

AI Risk Management vs Governance: What’s the Difference?

Let’s be honest—AI in business is evolving fast, and it can get overwhelming. You keep hearing about “AI risk management” and “AI governance” like they’re the same thing.

But they’re not, and if you’re building or using AI-driven systems—especially in high-stakes industries like payments, banking, or fintech—understanding the difference really matters.

So let’s break it down in simple terms:

- AI Governance Sets the Rules

Think of AI governance as the bigger picture. It’s the blueprint that guides how artificial intelligence is designed, developed, and used.

It answers the big questions:

- Is this system fair and unbiased?

- Does it respect user privacy?

- Are we following the right ethical and legal frameworks?

Governance is the why and how behind trustworthy AI. It’s about setting up the right policies so your business doesn’t just build fast—but builds responsibly.

- AI Risk Management Focuses on the Threats

Now, AI risk management lives inside that bigger governance framework. It’s more tactical—it’s about spotting potential problems before they hit.

For example:

- Could this algorithm be manipulated?

- Are we exposing ourselves to a compliance risk?

- Is this AI making decisions we don’t fully understand?

AI risk management is the hands-on part. It’s about identifying, assessing, and reducing threats so that the AI systems you rely on are secure, reliable, and safe to use.

- One Can’t Work Without the Other

Here’s the truth: good governance needs strong risk management—and vice versa.

If you only focus on governance without managing real-world risks, you’re building frameworks without safeguards. And if you only chase threats without a governance plan, you’re solving problems without direction.

To use artificial intelligence in business responsibly, you need both.

Why AI Risk Management Is Non-Negotiable

Let’s be clear: if your business uses AI in payments, compliance, or fraud detection, managing AI risk isn’t optional—it’s essential.

The speed at which AI systems are being deployed is staggering. But here’s the catch—most businesses still lack the controls to keep those systems in check. And when you’re dealing with sensitive data, money movement, and real-time decisions, the cost of “we’ll deal with it later” can be massive.

Here’s why AI risk management should be built into your operations from day one:

- AI Is Moving Faster Than Security

More companies are embracing AI for automation and cost savings, but that doesn’t mean they’re ready for the risks. Studies show that while adoption is high, most AI deployments lack basic safeguards.

That means unsecured data. Unchecked bias. Unmonitored outcomes.

If you’re not actively managing those risks, you’re building systems that could fail silently—or worse, publicly.

- Payment Systems Have Zero Margin for Error

In the payment authorization process, one wrong decision by an AI model could block a legit transaction—or let a fraudulent one slip through.

AI in payments is only as good as the controls around it. Risk management ensures those controls are in place, tested, and updated regularly.
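To make that trade-off concrete, here is a minimal Python sketch that counts both kinds of error at a given decision threshold. The scores, labels, and thresholds are made up for illustration and don't come from any real payment system; `count_errors` is a hypothetical helper, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class ErrorCounts:
    false_declines: int  # legitimate transactions that were blocked
    missed_fraud: int    # fraudulent transactions that were approved


def count_errors(scores, is_fraud, threshold):
    """Block a transaction when its fraud score is >= threshold."""
    false_declines = 0
    missed_fraud = 0
    for score, fraud in zip(scores, is_fraud):
        blocked = score >= threshold
        if blocked and not fraud:
            false_declines += 1
        elif not blocked and fraud:
            missed_fraud += 1
    return ErrorCounts(false_declines, missed_fraud)


# Hypothetical model scores and ground-truth labels.
scores = [0.05, 0.20, 0.35, 0.60, 0.80, 0.95]
is_fraud = [False, False, True, False, True, True]

strict = count_errors(scores, is_fraud, threshold=0.30)
lenient = count_errors(scores, is_fraud, threshold=0.90)
```

With this toy data, the strict threshold blocks one legitimate payment and the lenient one lets two fraudulent payments through. Tracking both numbers side by side is one simple way to test whether a control is still doing its job.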

- Compliance Isn’t a Future Problem: It’s a Current Requirement

Regulators are already cracking down on AI governance. If your system makes decisions without transparency or auditability, you’re at risk of penalties, lawsuits, or reputational damage.

AI risk management ensures your tools don’t just work—but work responsibly.

- Customers Expect Safety, Not Just Speed

Fast decisions aren’t helpful if they’re wrong. If your AI denies someone a payment or flags a false fraud alert, that’s a direct hit to user trust.

Managing AI risk helps you build systems that are not only smart but also fair, reliable, and safe to scale.

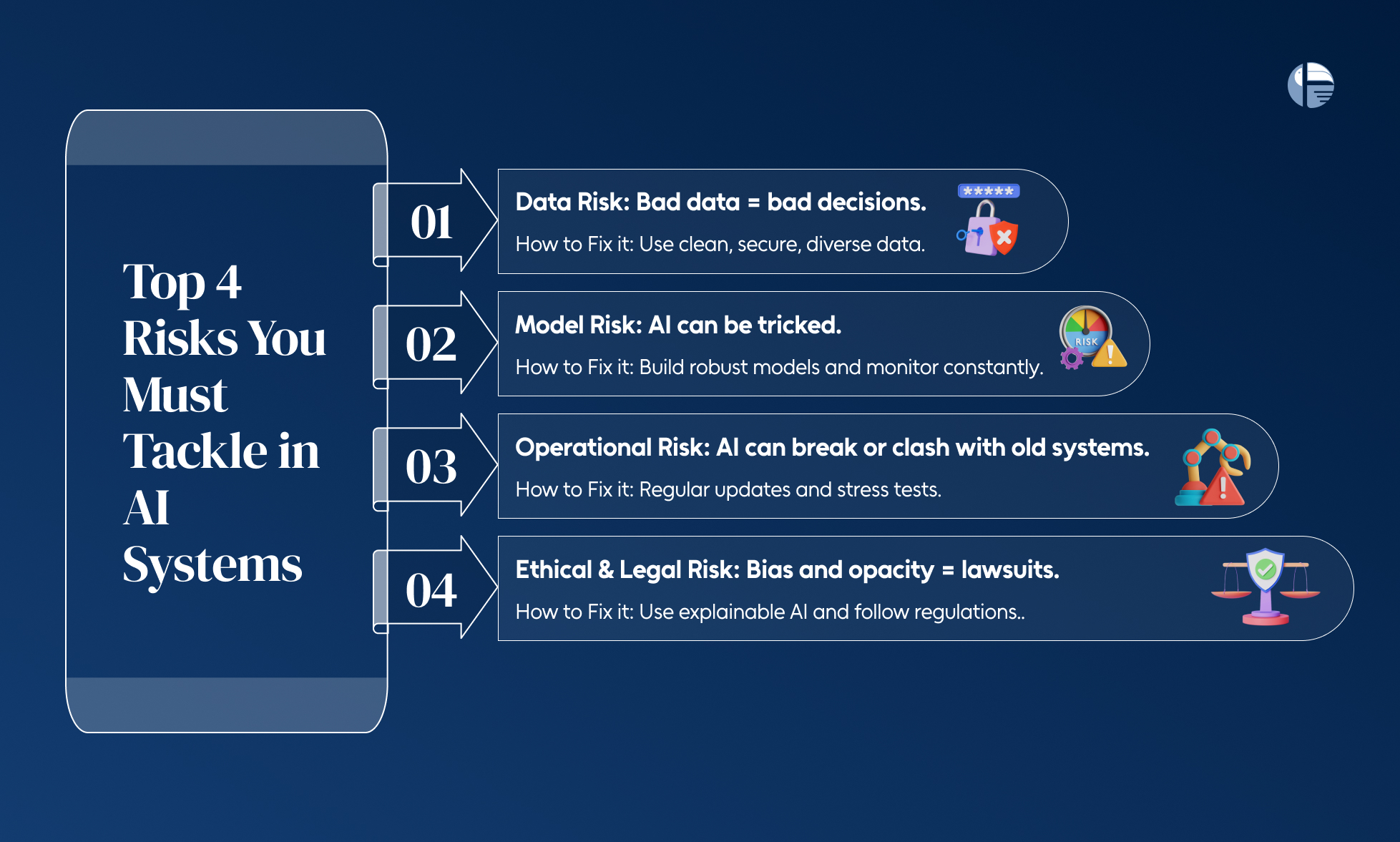

Top 4 Risks You Must Tackle in AI Systems

If you’re thinking AI will solve all your problems, think again. AI systems—especially in high-stakes sectors like payments, banking, and credit—come with serious risks that can’t be ignored.

Let’s break down the top four you must address for safe and compliant AI adoption.

- Data Risk: Bad In, Worse Out

AI is only as good as the data it feeds on. If your training data is biased, outdated, or breached, your AI model could end up approving fraudulent transactions—or denying legit ones.

How to mitigate it: Regular audits, encrypted storage, and diverse datasets are non-negotiable.
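A regular audit can start very small. The sketch below assumes each training record is a dictionary with a `date` and an `is_fraud` flag, and it flags a dataset that is stale or has too few fraud examples to learn from. The field names and the default limits (`max_age_days`, `min_fraud_share`) are illustrative assumptions, not recommended values.

```python
from datetime import date


def audit_training_data(records, today, max_age_days=365, min_fraud_share=0.01):
    """Return a list of human-readable findings; an empty list means no flags."""
    if not records:
        return ["dataset is empty"]

    findings = []

    # Staleness check: how old is the most recent record?
    newest = max(r["date"] for r in records)
    age_days = (today - newest).days
    if age_days > max_age_days:
        findings.append(f"newest record is {age_days} days old")

    # Balance check: are there enough fraud examples?
    fraud_share = sum(1 for r in records if r["is_fraud"]) / len(records)
    if fraud_share < min_fraud_share:
        findings.append(f"fraud share {fraud_share:.2%} is below the minimum")

    return findings


# Hypothetical records for illustration.
records = [
    {"date": date(2022, 1, 10), "is_fraud": False},
    {"date": date(2022, 3, 5), "is_fraud": False},
    {"date": date(2022, 6, 1), "is_fraud": True},
]
findings = audit_training_data(records, today=date(2024, 6, 1))
```

Here the audit returns a single finding because the newest record is about two years old. A real audit would also cover access controls and encryption, which this sketch leaves out.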

- Model Risk: When AI Gets Tricked

AI models can be manipulated—through adversarial attacks or sneaky prompt injections. The result? Misleading outputs, data leaks, or unauthorized access.

How to mitigate it: Harden models against tampering with adversarial testing and input validation, and monitor performance in real time.

- Operational Risk: What Happens When AI Breaks?

AI systems can decay over time. They might miss fraud patterns or create friction in payment flows. Worse, they can clash with legacy infrastructure.

How to mitigate it: Plan for model updates, stress-test systems, and ensure seamless integration.
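One common way to spot decay is to compare the model's recent score distribution against a baseline. The sketch below uses the Population Stability Index (PSI), a widely used drift metric. It is one option among several, and the bin edges and sample scores are assumptions for illustration.

```python
import math


def population_stability_index(expected, actual, bin_edges):
    """PSI between a baseline sample and a recent sample of model scores."""

    def bin_shares(values):
        counts = [0] * (len(bin_edges) - 1)
        for v in values:
            for i in range(len(counts)):
                last = i == len(counts) - 1
                # Bins are half-open, except the last one includes its upper edge.
                if bin_edges[i] <= v < bin_edges[i + 1] or (last and v == bin_edges[-1]):
                    counts[i] += 1
                    break
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical fraud scores: baseline at launch vs. a recent window.
baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
recent = [0.1, 0.6, 0.7, 0.8, 0.9, 0.6, 0.7, 0.8]
edges = [0.0, 0.5, 1.0]

psi_same = population_stability_index(baseline, baseline, edges)
psi_drifted = population_stability_index(baseline, recent, edges)
```

A commonly quoted rule of thumb treats a PSI above 0.25 as a significant shift. The drifted sample here lands well above that, which would be a cue to review or retrain the model. Treat the cut-off as a starting point to tune, not a standard.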

- Ethical & Legal Risk: Bias and Blowback

From biased loan approvals to unclear audit trails, AI can cross ethical lines fast—especially in financial services. If you’re not transparent, compliant, and explainable, you’re opening doors to lawsuits and reputational damage.

How to mitigate it: Invest in explainable AI and align with evolving regulations like the EU AI Act and India’s Digital Personal Data Protection (DPDP) Act.
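A simple first check for bias is to compare approval rates across groups. The sketch below computes a disparate impact ratio and flags anything under 0.8, a cut-off borrowed from the "four-fifths" rule of thumb used in U.S. employment contexts. Whether that threshold fits your lending or payments use case is an assumption to confirm with your compliance team. The group data is invented.

```python
def approval_rate(decisions):
    """Share of approved decisions in a list of booleans."""
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(group_a, group_b):
    """Ratio of the lower approval rate to the higher one (1.0 means parity)."""
    rate_a, rate_b = approval_rate(group_a), approval_rate(group_b)
    high = max(rate_a, rate_b)
    if high == 0:
        return 1.0  # nobody was approved in either group, so no disparity to report
    return min(rate_a, rate_b) / high


# Hypothetical approval decisions for two customer groups.
group_a = [True, True, True, True, False]    # 80% approved
group_b = [True, True, False, False, False]  # 40% approved

ratio = disparate_impact_ratio(group_a, group_b)
needs_review = ratio < 0.8  # illustrative threshold, see the note above
```

A low ratio doesn't prove discrimination on its own, but it tells you where to dig into the model's features and training data.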

Breaking Down AI Risk Management Frameworks

Let’s face it—AI can be a game-changer, but only if it doesn’t backfire. From biased credit decisions to payment approval errors, the risks are real. That’s where AI risk management frameworks come in.

Think of these as safety blueprints. They guide companies—especially those in high-stakes industries like payments and banking—on how to build, test, and run AI without letting it spiral out of control.

Here are four frameworks every FinTech leader should know:

- NIST AI Risk Management Framework (AI RMF)

If you want a structured, industry-agnostic playbook, NIST is where to start. Released in January 2023 by the U.S. National Institute of Standards and Technology, this voluntary framework is all about trustworthy AI.

It breaks AI risk management into four core actions:

- Govern: Build an internal culture that takes AI risk seriously.

- Map: Identify where AI can go wrong in your specific use case.

- Measure: Assess how severe the risks are—quantitatively, not just qualitatively.

- Manage: Put controls in place to prevent or fix issues quickly.
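The Map, Measure, and Manage steps above can be sketched as a tiny risk register. The likelihood-times-impact score is a common risk-register convention rather than something the NIST framework prescribes, and the risks, scales, and threshold below are invented for illustration. Govern is about culture and accountability, so it isn't modeled in code here.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str        # Map: what could go wrong
    likelihood: int  # Measure: 1 (rare) to 5 (frequent)
    impact: int      # Measure: 1 (minor) to 5 (severe)
    control: str     # Manage: the mitigation assigned to this risk

    @property
    def score(self):
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Return risks at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Hypothetical register entries.
register = [
    Risk("Fraud model drift", likelihood=4, impact=4, control="Monthly retraining review"),
    Risk("Biased training data", likelihood=3, impact=5, control="Quarterly fairness audit"),
    Risk("Vendor API outage", likelihood=2, impact=3, control="Fallback rules engine"),
]
top = prioritize(register)
```

In this example, model drift and biased training data rise to the top, while the vendor outage stays below the review threshold.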

- EU AI Act

Europe’s no-nonsense approach to AI regulation is shaking things up—and it’s especially relevant if your business touches EU users or markets.

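This act classifies AI systems into risk tiers (minimal, limited, high, and unacceptable). AI used for credit scoring or for assessing an individual’s creditworthiness is listed as “high risk.” Fraud detection and customer verification need a case-by-case check: the Act explicitly excludes systems used to detect financial fraud from the credit-scoring category, so don’t assume one tier covers your whole payment stack.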

What it means for you:

- Be ready to explain how your AI works.

- Ensure your systems don’t discriminate or violate user rights.

- Keep detailed audit logs—compliance will be non-negotiable.
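For the audit-log point, one approach is to make the log tamper-evident by chaining entries together with hashes. The Act doesn't mandate this particular design. It's a minimal sketch using Python's standard library, and the record fields (`txn`, `outcome`, `model`, `score`) are hypothetical.

```python
import hashlib
import json


def append_entry(log, decision):
    """Append a decision record whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    payload = json.dumps(decision, sort_keys=True)
    entry_hash = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    log.append({"decision": decision, "prev_hash": prev_hash, "hash": entry_hash})


def verify(log):
    """Recompute every hash; return False if any entry was altered."""
    prev_hash = "0" * 64
    for entry in log:
        payload = json.dumps(entry["decision"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"txn": "T-1001", "outcome": "approved", "model": "fraud-v3", "score": 0.12})
append_entry(log, {"txn": "T-1002", "outcome": "declined", "model": "fraud-v3", "score": 0.91})
intact = verify(log)

log[0]["decision"]["outcome"] = "declined"  # simulate someone editing history
tampered_detected = not verify(log)
```

Because each hash depends on the one before it, quietly editing an old decision breaks verification for the chain. In production you would also store the log somewhere append-only and record which model version made each decision.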

- ISO/IEC AI Standards

These international standards are all about consistency and ethics. If you’re deploying AI across multiple regions or with enterprise clients, ISO/IEC guidelines help you stay compliant globally.

The focus?

- Transparent decision-making (no black boxes).

- Ethical data usage.

- Risk controls across the entire AI lifecycle, from design to deployment.

- U.S. Executive Order on AI (2023)

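The U.S. government’s October 2023 executive order (Executive Order 14110) added teeth to national AI oversight, pushing for responsible innovation while safeguarding national interests. It was rescinded in January 2025, so read it as a signal of regulatory direction rather than a current mandate.

For FinTechs and payment processors, the order pointed to: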

- Increased scrutiny on how AI impacts consumer financial data.

- A push toward testing, transparency, and safety certifications.

- Encouragement to align with NIST or similar frameworks.

AI won’t slow down, so your risk strategy had better keep up.

As adoption surges across the financial and payment sectors, the organizations that stand out won’t just be fast—they’ll be secure, ethical, and resilient.

Understanding the difference between AI risk management and AI governance isn’t just semantics. It’s the foundation of safe AI deployment in high-risk use cases like payment authorization, fraud detection, and credit scoring.

Start with frameworks. Bake in risk controls. And build AI that doesn’t just work—but works responsibly.

Because in payments, one wrong AI decision isn’t just a bug—it’s a business risk.

At Toucan Payments, we’re building AI-driven solutions you can trust—designed with responsible risk practices, real-time controls, and transparency at every layer.

Discover how we make AI in payments not just powerful, but dependable.